Annex II – Examples of European patent applications


A revised version of this publication entered into force.

Directional touch remote

Title of invention (designation in request for grant suffices)

The present technology is related to remote controls and specifically touch device remote controls.

R. 42(1)(a)

Technical field to which invention relates

INTRODUCTION

As devices become more complex, so do the remotes that control them. The earliest remotes were effective because of their simplicity, but as technology progressed they came to lack functionality. Today it is common to find remotes with upwards of fifty buttons, each often having multiple functions. Such remotes often require close study to learn the function of a button and frequently have buttons so small that accidental commands are all too common.

Buttons are convenient because they provide tactile feedback when a command is given, and in some cases a select few buttons can even have a different feel or shape so that the remote can be operated with minimal visual confirmation that the correct button is being selected. Even with shapes or textures, too often a user's focus is taken off the remotely controlled device and placed on the remote, an undesirable consequence of a complicated interface.

Touch screen remotes attempt to solve some of these problems but can create others. While touch screen remotes are able to display fewer options on the screen at one time by having the user navigate menus to find the appropriate command, touch screens have always required the user to view the remote. Since touch screens do not provide tactile feedback that allows the user to confirm which selection is made, the user must visually confirm the selection, which is undesirable.

R. 42(1)(b)

Indication of background art

Other touch screen remotes are application specific and require two-way communication wherein the remotely controlled device instructs the remote to display a certain graphical user interface. The user must view the remote to make selections because the interface on the controller changes according to the context of the display of the remotely controlled device. Further, these remotes have the drawback that they can sometimes operate too slowly for a remote application owing to the back-and-forth communication between the remote and the remotely controlled device.

Still other touch screen remotes control a cursor displayed on the remotely controlled device. While these remotes can be operated by viewing the remotely controlled device instead of the remote itself, they can require too much user effort to enter a command. The user must undergo the cumbersome steps of navigating a cursor to a menu and selecting the command. This type of interaction is far removed from the simple click-and-control benefits of earlier remotes.

Accordingly, a simple remote that is capable of operation without viewing a display of the remote, and which operates quickly and efficiently while minimizing accidental command inputs is desirable.

R. 42(1)(c)

Technical problem to be solved

US2008/0284726 describes an apparatus for sensory based media control including a media device having a controller element to receive from a media controller a first instruction to select an object in accordance with a physical handling of the media controller, and a second instruction to control the identified object or perform a search on the object in accordance with touchless finger movements.

US2008/0059578 relates to a gesture-enabled electronic communication system that informs users of gestures made by other users participating in a communication session. The system captures a three-dimensional movement of a first user from among the multiple users participating in an electronic communication session, wherein the three-dimensional movement is determined using at least one image capture device aimed at the first user. The system identifies a three-dimensional object properties stream using the captured movement and then identifies a particular electronic communication gesture representing the three-dimensional object properties stream by comparing the identified three-dimensional object properties stream with multiple electronic communication gesture definitions.

US2007/0152976 discloses a method for rejecting an unintentional palm touch. In at least some embodiments, a touch is detected by a touch-sensitive surface associated with a display. Characteristics of the touch may be used to generate a set of parameters related to the touch. In an embodiment, firmware is used to determine a reliability value for the touch. The reliability value and the location of the touch are provided to a software module. The software module uses the reliability value and an activity context to determine a confidence level of the touch. In an embodiment, the confidence level may include an evaluation of changes in the reliability value over time. If the confidence level for the touch is too low, it may be rejected.

US2004/0218104 relates to a user interface for multimedia centers which utilizes handheld inertial-sensing user input devices to select channels and quickly navigate the dense menus of options. Extensive use of the high resolution and bandwidth of such user input devices is combined with strategies to avoid unintentional inputs and with dense and intuitive interactive graphical displays.

SUMMARY

The invention is defined by the appended independent claims. Additional features and advantages of the concepts disclosed herein are set forth in the description which follows, and in part will be obvious from the description, or may be learned by practice of the described technologies. The features and advantages of the concepts may be realized and obtained by means of the instruments and combinations particularly pointed out in the appended claims. These and other features of the described technologies will become more fully apparent from the following description and appended claims, or may be learned by the practice of the disclosed concepts as set forth herein.

R. 42(1)(c)

Disclosure of invention

The present disclosure describes methods and arrangements for remotely controlling a device with generic touch screen data by displaying a Graphical User Interface (GUI) comprising a plurality of contexts on a display device for controlling at least some functions of a device. Data characterizing a touch event can be received and interpreted or translated into a list of available commands. These commands can effect an action by the device in response to the touch data as interpreted in view of the context of the GUI.

The present disclosure further includes a computer-readable medium for storing program code effective to cause a device to perform at least the steps of the method discussed above, and throughout this description.

In some instances the same touch event can effect the same action in multiple GUI contexts, but in other instances, the same touch can effect different actions in different interface contexts.

Any portable electronic device, including a cell phone, smart phone, PDA or portable media player, can act as the remote.

Likewise, any number of devices can be controlled by the remote, including a multimedia management program and a player, a digital video recorder, or a television-tuning device such as a television or cable box.

Also disclosed is a multiple function device running an application causing the multiple function device to act as a remote control. A touch screen device displaying a graphical user interface comprising an unstructured touch sensitive area is provided. The touch screen can be configured to receive a variety of inputs in the form of touch gestures in the unstructured touch sensitive area and to interpret the gestures into electronic signals, the unstructured touch sensitive area comprising at least a region having no individually selectable features. The device also comprises a processor for receiving the electronic signals from the touch sensitive area and translating the signals into at least position, movement, and durational parameters. Additionally, a communications interface is provided for receiving the parameters from the processor and sending the parameters to a device to be remotely controlled for interpretation. The communications interface can use at least a protocol that can be used in a substantially unidirectional fashion to send the parameters to the remotely controlled device, whereby no input confirmation is received on the remote control device.

In some embodiments a method of remotely controlling an application, selected from a plurality of possible applications on the same or a plurality of different remote devices is disclosed. Data representative of touch inputs can be received into an unstructured portion of a touch screen interface. The data can be received by a remotely controlled device or device hosting a remotely controlled application and interpreted into a set of available events recognizable by the remotely controlled device or application. Based on a set of available events a GUI associated with a context can be updated. The update can be in response to a selection of an event from the set of available events recognizable by the remotely controlled application that is selected based on the context associated with the displayed GUI.

BRIEF DESCRIPTION OF THE DRAWINGS

In order to best describe the manner in which the above-described embodiments are implemented, as well as define other advantages and features of the disclosure, a more particular description is provided below and is illustrated in the appended drawings. Understanding that these drawings depict only exemplary embodiments of the invention and are not therefore to be considered to be limiting in scope, the examples will be described and explained with additional specificity and detail through the use of the accompanying drawings in which:

R. 42(1)(d)

Brief description of drawings

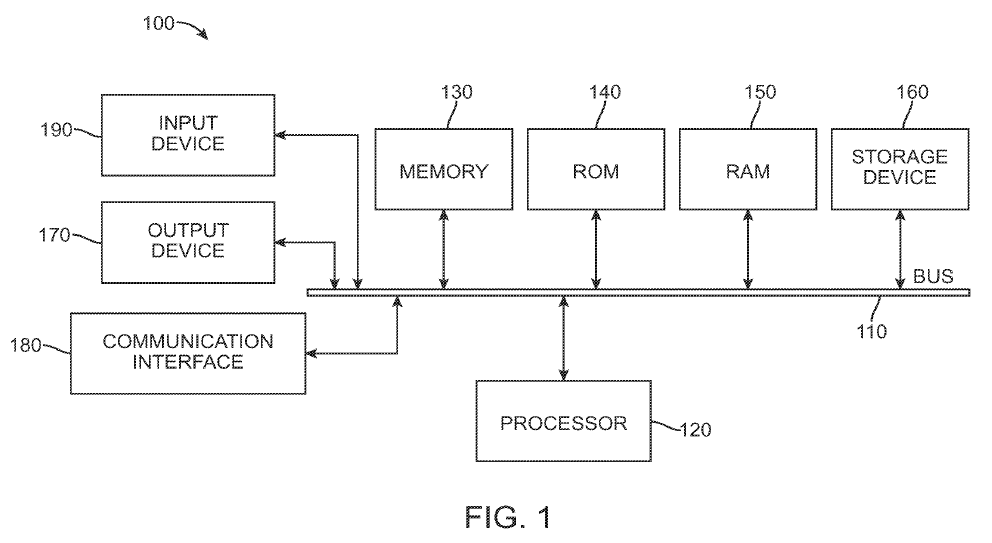

FIG. 1 illustrates an example computing device;

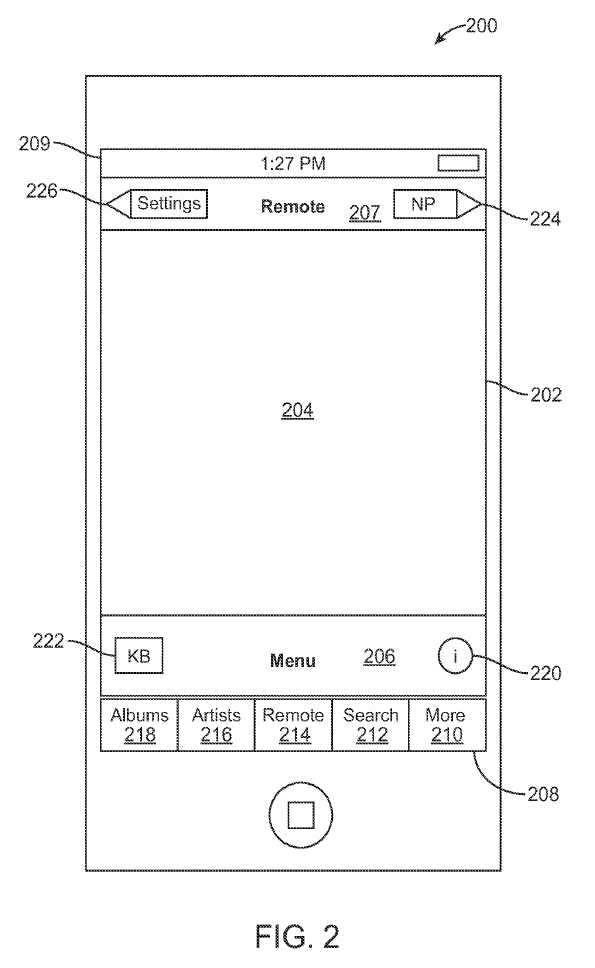

FIG. 2 illustrates an example interface embodiment for a remote control device;

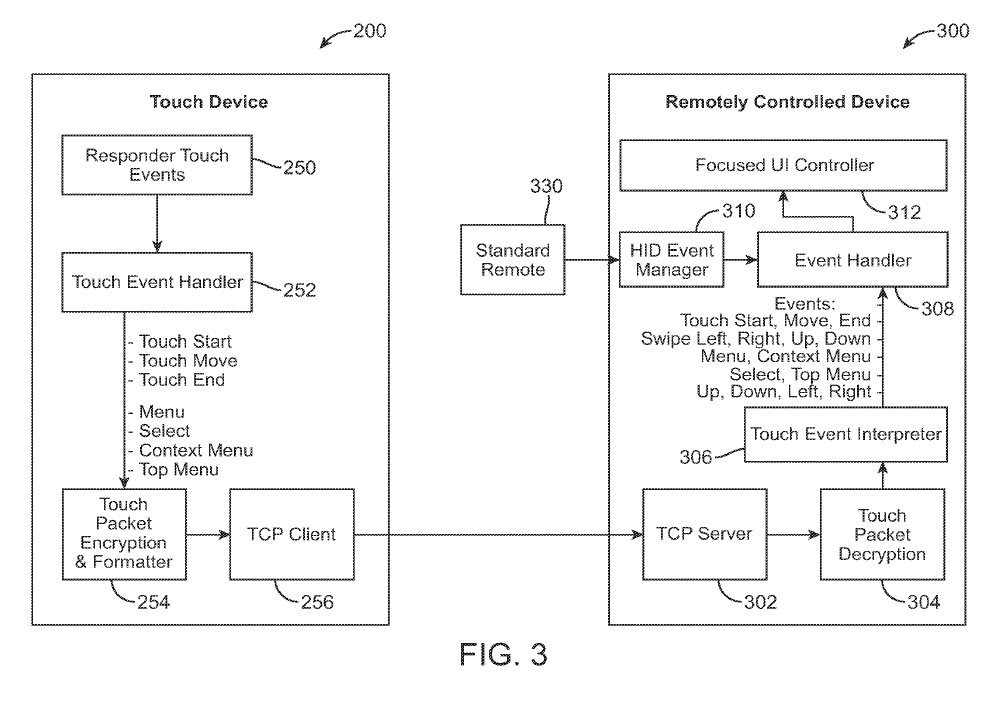

FIG. 3 illustrates an example functional diagram system embodiment;

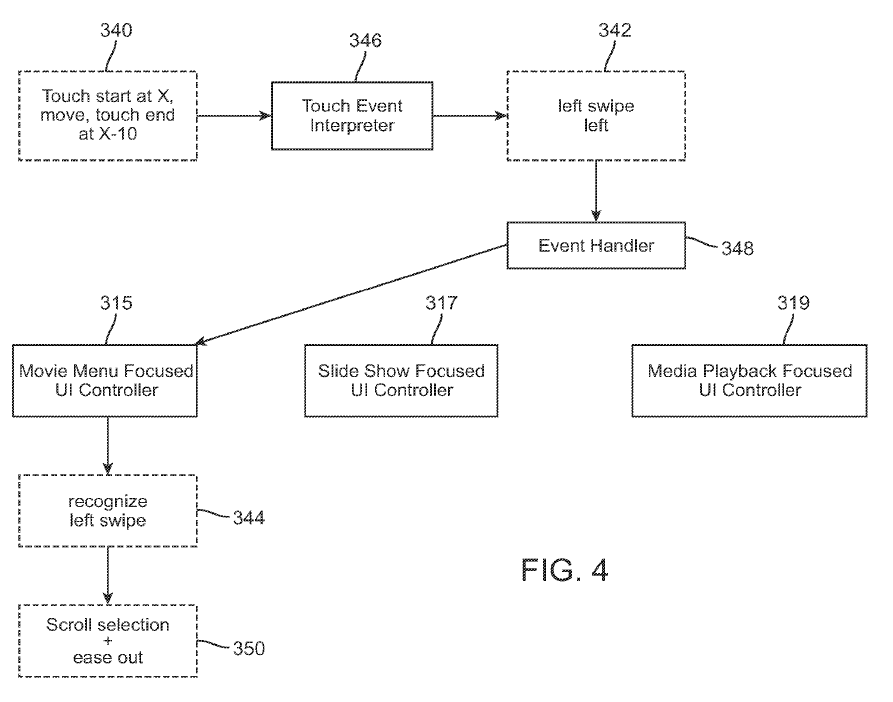

FIG. 4 illustrates an example flow diagram of a focused UI controller function embodiment;

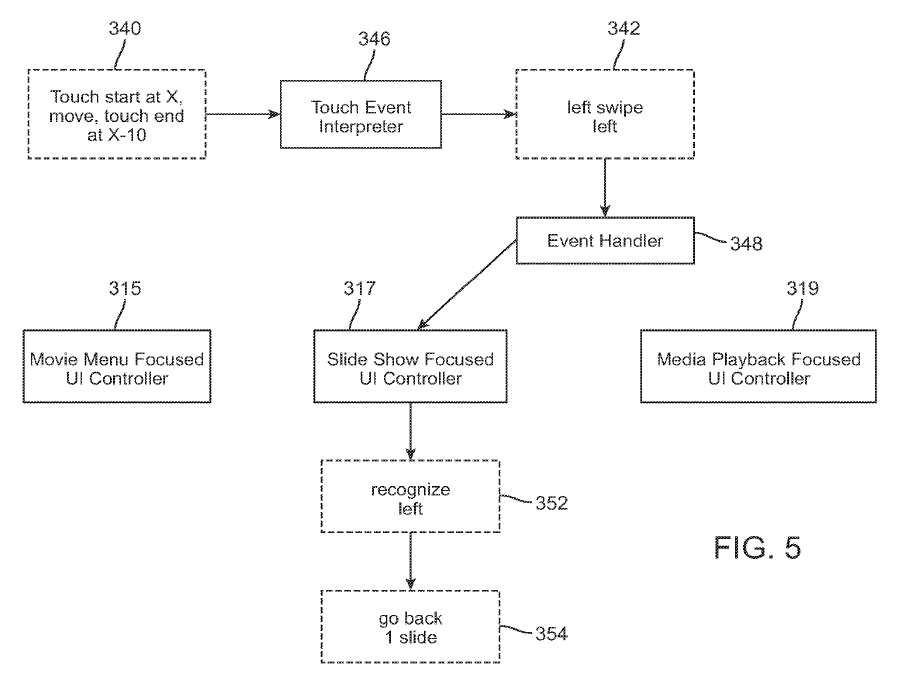

FIG. 5 illustrates an example flow diagram of a focused UI controller function embodiment;

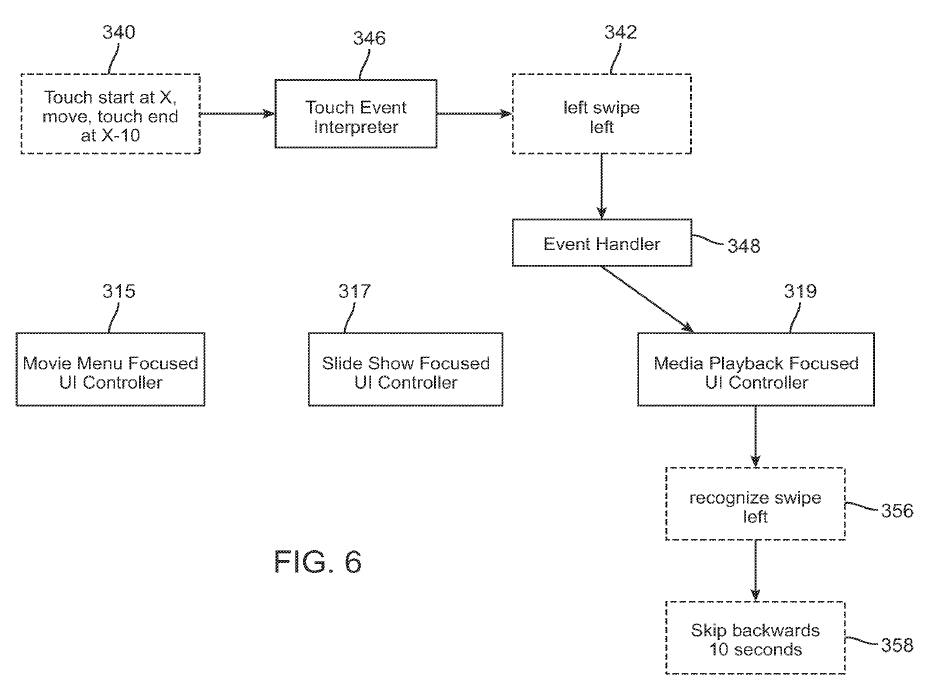

FIG. 6 illustrates an example flow diagram of a focused UI controller function embodiment;

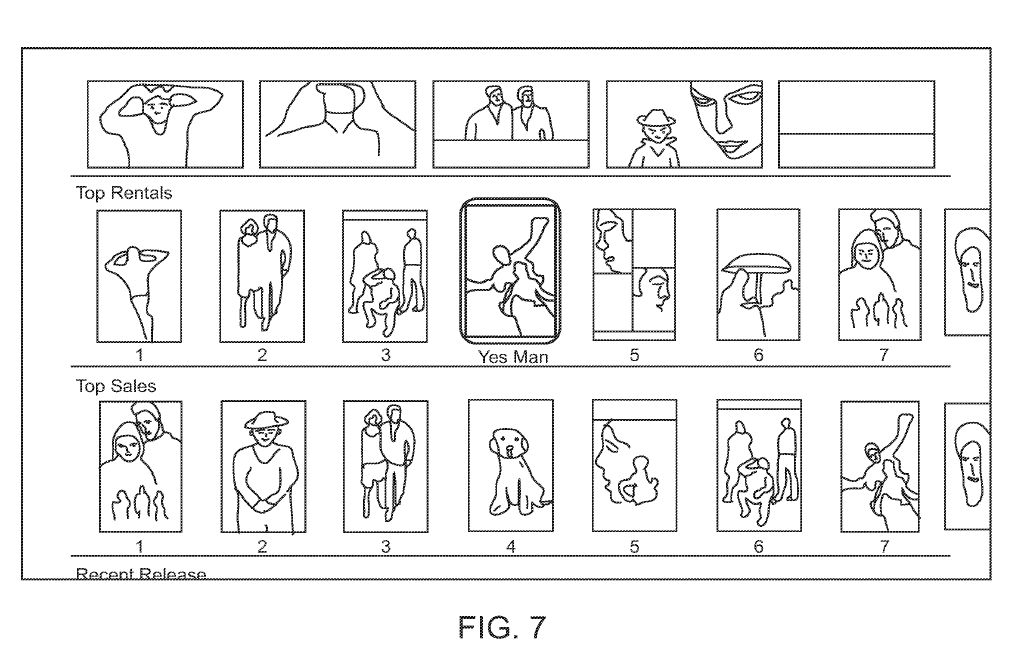

FIG. 7 illustrates an example menu graphical user interface embodiment;

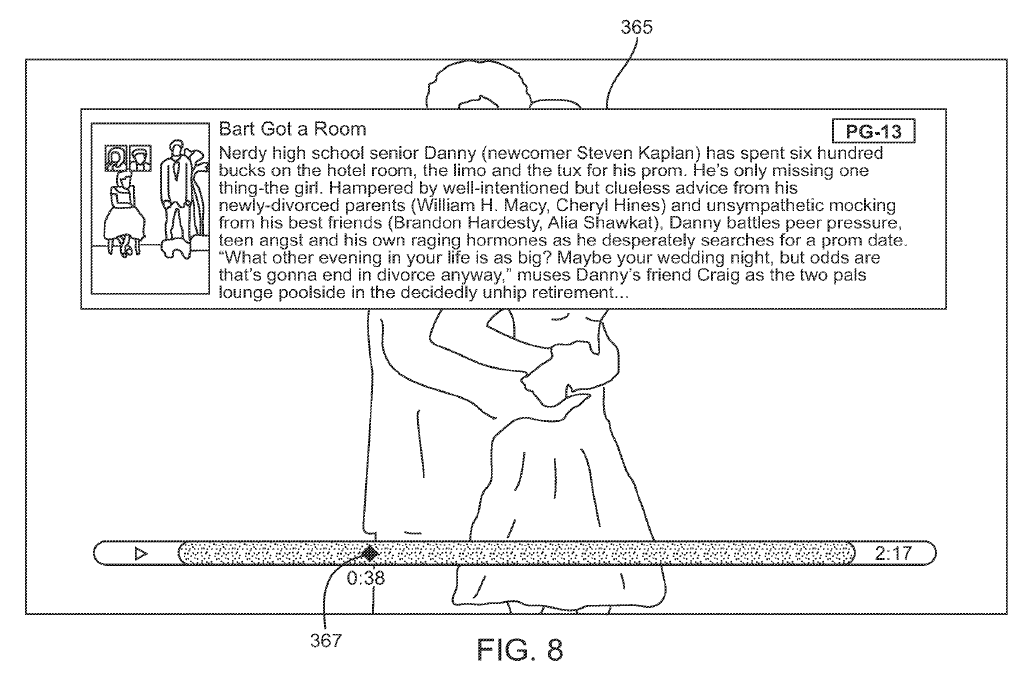

FIG. 8 illustrates an example media playback graphical user interface embodiment;

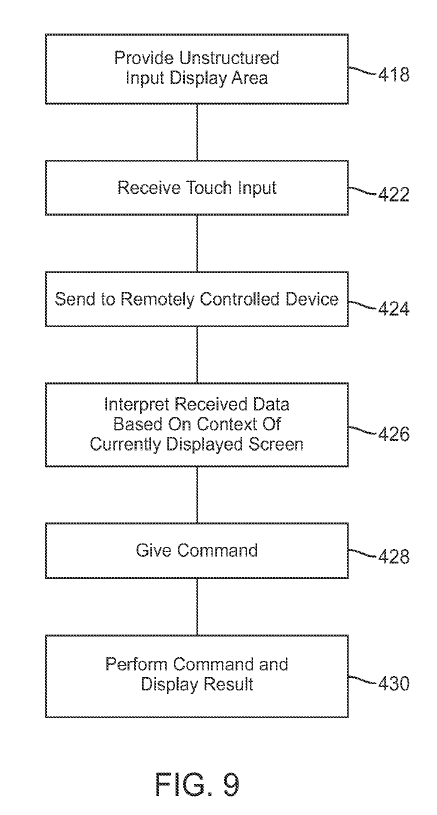

FIG. 9 illustrates an example method embodiment; and

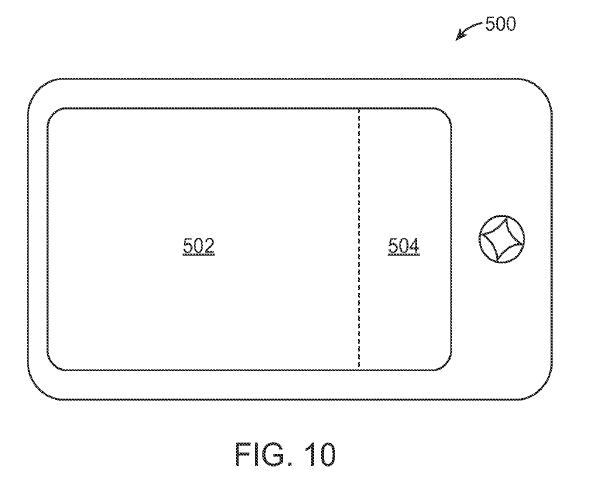

FIG. 10 illustrates an example interface embodiment for a remote control device.

DETAILED DESCRIPTION

Various embodiments of the disclosed methods and arrangements are discussed in detail below. While specific implementations are discussed, it should be understood that this is done for illustration purposes only. A person skilled in the relevant art will recognize that other components, configurations, and steps may be used without parting from the spirit and scope of the disclosure.

R. 42(1)(e)

Description of at least one way of carrying out the invention

With reference to FIG. 1, a general-purpose computing device 100 which can be portable or stationary is shown.

The general-purpose computing device can be suitable for carrying out the described embodiments, or in some embodiments two or more general-purpose computing devices can communicate with each other to carry out the embodiments described below. The general-purpose computing device 100 is shown including a processing unit (CPU) 120 and a system bus 110 that couples various system components including the system memory such as read only memory (ROM) 140 and random access memory (RAM) 150 to the processing unit 120. Other system memory 130 may be available for use as well. It can be appreciated that the system may operate on a computing device with more than one CPU 120 or on a group or cluster of computing devices networked together to provide greater processing capability. The system bus 110 may be any of several types of bus structures including a memory bus or memory controller, a peripheral bus, and a local bus using any of a variety of bus architectures. A basic input/output system (BIOS) stored in ROM 140 or the like may provide the basic routine that helps to transfer information between elements within the computing device 100, such as during start-up. The computing device 100 further includes storage devices such as a hard disk drive 160, a magnetic disk drive, an optical disk drive, tape drive or the like. The storage device 160 is connected to the system bus 110 by a drive interface. The drives and the associated computer readable media provide nonvolatile storage of computer readable instructions, data structures, program modules and other data for the computing device 100. In one aspect, a hardware module that performs a particular function includes the software component stored in a tangible computer-readable medium in connection with the necessary hardware components, such as the CPU, bus, display, and so forth, to carry out the function.
The basic components are known to those of skill in the art and appropriate variations are contemplated depending on the type of device, such as whether the device is a small, handheld computing device, a desktop computer, or a large computer server.

Although the exemplary environment described herein employs a hard disk, it should be appreciated by those skilled in the art that other types of computer readable media which can store data that is accessible by a computer, such as magnetic cassettes, flash memory cards, digital versatile disks, cartridges, random access memories (RAMs) and read only memory (ROM), may also be used in the exemplary operating environment.

To enable user interaction with the computing device 100, an input device 190 represents any number of input mechanisms, such as a microphone for speech, a touch-sensitive screen for gesture or graphical input, keyboard, mouse, motion input, speech and so forth. The device output 170 can also be one or more of a number of output mechanisms known to those of skill in the art. For example, video output or audio output devices which can be connected to or can include displays or speakers are common. Additionally, the video output and audio output devices can also include specialized processors for enhanced performance of these specialized functions. In some instances, multimodal systems enable a user to provide multiple types of input to communicate with the computing device 100. The communications interface 180 generally governs and manages the user input and system output. There is no restriction on the disclosed methods and devices operating on any particular hardware arrangement and therefore the basic features may easily be substituted for improved hardware or firmware arrangements as they are developed.

For clarity of explanation, the illustrative system embodiment is presented as comprising individual functional blocks (including functional blocks labeled as a "processor"). The functions these blocks represent may be provided through the use of either shared or dedicated hardware, including, but not limited to, hardware capable of executing software. For example the functions of one or more processors presented in FIG. 1 may be provided by a single shared processor or multiple processors. (Use of the term "processor" should not be construed to refer exclusively to hardware capable of executing software.) Illustrative embodiments may comprise microprocessor and/or digital signal processor (DSP) hardware, read-only memory (ROM) for storing software performing the operations discussed below, and random access memory (RAM) for storing results. Very large scale integration (VLSI) hardware embodiments, as well as custom VLSI circuitry in combination with a general purpose DSP circuit, may also be provided.

The logical operations of the various embodiments can be implemented as: (1) a sequence of computer implemented steps, operations, or procedures running on a programmable circuit within a general use computer, (2) a sequence of computer implemented steps, operations, or procedures running on a specific-use programmable circuit; and/or (3) interconnected machine modules or program engines within the programmable circuits.

The present system and method is particularly useful for remotely controlling a device having one or more menus via a remote touch interface having at least an unstructured primary input area. A user can provide inputs to a touch interface without needing to view the interface and yet still achieve the desired response from the remotely controlled device. The primary input area of the touch interface may or may not have a background display, such as on a typical touch screen, but the primary input area of the touch interface should be unstructured. In other words, in preferred embodiments, the primary input area of the touch interface should not have independently selectable items, buttons, icons or anything of the like. Since the touch interface is unstructured, the user does not have to identify any selectable buttons. Instead the user can input a gesture into the interface and watch the device respond. In some embodiments, the system does not provide any other visual confirmation.

FIG. 2 illustrates an example remote embodiment. A remote 200 is shown running an application or other software routine providing the interface. While in the displayed embodiment, remote 200 is shown having a touch screen interface 202, the interface could be any touch sensitive interface, such as, for example, a capacitive sensing touch pad. Further, the remote device itself can be a dedicated remote device or a portable electronic device having other functions such as a smart phone or portable music playing device or a PDA.

The touch sensitive interface 202 comprises a primary touch sensitive area 204, which can receive the majority of the user touch inputs. In the displayed embodiment, the touch sensitive interface also comprises other touch sensitive areas including a menu area 206, a navigation bar 207, a tab bar 208, and a status bar 209.

The primary touch sensitive area 204 is an unstructured area, having no individually selectable items such as buttons or icons. Further, since area 204 is unstructured, there are no selectable items to select or avoid and therefore the primary touch sensitive area is especially conducive to providing input to the remote without needing to view the remote itself. Instead a user can view the remotely controlled device for feedback.

Starting with the navigation bar 207, two independently selectable buttons are displayed. Back button 226, shown here labeled "Settings" because in this example illustration a user can return to the settings menu, can be selected to return the user to the previous screen. The button's label can change to display the name of the screen that the user will be returned to if the button is selected. Button 224 acts as a forward button, but in most cases takes the user to a "Now Playing Screen" (abbreviated as NP) which is present when the remotely controlled device is playing audio or video. It should be appreciated here and throughout the document that while many of the buttons in the remote interface are indicated as having a specific name, the labeling is not intended to be limiting.

The entire menu area 206 is a touch sensitive area which records inputs to return to the previous menu by receiving taps in the menu area, or to return to the top-level-menu by receiving and detecting a press and hold action. In the illustrated embodiment two other buttons are present. Keyboard button 222 and information button 220 can be displayed when they are needed and not displayed when they are not needed. For example, when a keyboard is present on the user interface of the remotely controlled device, the keyboard button 222 can appear. Selecting the keyboard button 222 can cause a keyboard to appear on the remote interface for easier typing into the remotely controlled device's interface. Similarly, information button 220 can be displayed when an item is displayed on the remotely controlled device's interface for which information is available.
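By way of illustration only, the distinction drawn above between a tap and a press and hold in the menu area 206 can be sketched as a classification on touch duration. The 0.5 second threshold and the action labels below are hypothetical; the description does not specify either.

```python
# Hypothetical threshold separating a tap from a press and hold (seconds).
HOLD_THRESHOLD_S = 0.5


def menu_area_action(duration_s: float) -> str:
    """Map a touch in the menu area 206 to a navigation action.

    A short tap returns to the previous menu; a press and hold returns
    to the top-level menu. The returned labels are illustrative only.
    """
    if duration_s < 0:
        raise ValueError("duration must be non-negative")
    if duration_s >= HOLD_THRESHOLD_S:
        return "top_menu"
    return "previous_menu"
```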

Buttons 220 and 222 are located near the outer edges of the menu area 206 and of the screen 202 in general. Locating buttons 220 and 222 in the corners of the interface helps avoid accidental selections, as it is expected that a user would rarely hit one of the corners of the device accidentally.

In some embodiments, the remote device 200 can be a mobile phone, smart phone, portable multimedia player, PDA or other portable computing device capable of a diverse set of functions. In these embodiments a tab bar 208 can be useful to navigate between other functions on the remote device. The tab bar 208 can be a structured touch sensitive area with selectable buttons for this purpose. For example, button 218 can instruct the remote device 200 to switch to the albums menu, button 216 to the artists menu, and button 212 to a search screen. Button 210 can provide additional options. Remote button 214 can return the remote device back to the remote interface.

In some embodiments one or all of areas 206, 207, 208, and 209 may not be present as part of the remote interface. In some other embodiments areas 206, 207, 208, and 209 can ignore inputs in various contexts so as to avoid accidental selection. For example, when the remote device is held in a substantially horizontal fashion, all individually selectable inputs can be made unselectable, and in complementary fashion, when the device is held at a substantially vertical angle, the individually selectable inputs can be active to be selected.
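The orientation-dependent enabling of the individually selectable inputs can be sketched, purely as an assumption, as a gate on the device's pitch angle as reported by some motion sensor (0 degrees lying flat, 90 degrees upright). The 30 and 60 degree thresholds are hypothetical stand-ins for "substantially horizontal" and "substantially vertical", and keeping the previous state between them (hysteresis) is an added design choice, not something the description states.

```python
# Hypothetical thresholds for "substantially horizontal" / "substantially vertical".
HORIZONTAL_MAX_DEG = 30.0
VERTICAL_MIN_DEG = 60.0


def selectable_inputs_enabled(pitch_deg: float, currently_enabled: bool) -> bool:
    """Decide whether the individually selectable areas (206-209) accept input.

    pitch_deg is assumed to lie in [-90, 90], measured against the horizontal
    plane. Between the two thresholds the previous state is kept, so small
    wobbles of the hand do not toggle the buttons on and off.
    """
    tilt = abs(pitch_deg)
    if tilt <= HORIZONTAL_MAX_DEG:
        return False  # held flat: only the unstructured area 204 is live
    if tilt >= VERTICAL_MIN_DEG:
        return True   # held upright: the buttons can be selected
    return currently_enabled
```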

In some embodiments, one method to prevent accidental selection requires a selection action to both start and end within the individually selectable area. For example, to activate the menu area, the touch must start and end within the menu area 206. If a touch were to either begin or end outside of the menu area but in the primary touch sensitive area 204, then the input can be considered to be an input of the type usually given and detected in the primary touch sensitive area 204.
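The start-and-end rule for preventing accidental selection can be sketched as follows. This is a minimal illustration assuming that areas are axis-aligned rectangles in screen pixels; the `Rect` type, the `route_touch` function and the example menu-area geometry are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Rect:
    """Axis-aligned screen area in pixels (hypothetical representation)."""
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px < self.x + self.width and self.y <= py < self.y + self.height


def route_touch(start: Point, end: Point, menu_area: Rect) -> str:
    """Return the area a touch is attributed to.

    The touch counts as a menu-area input only if it both starts and ends
    inside the menu area; otherwise it is handled as an input to the
    primary touch sensitive area 204.
    """
    if menu_area.contains(*start) and menu_area.contains(*end):
        return "menu_area"
    return "primary_area"
```

For instance, with a menu area of `Rect(0, 0, 320, 40)`, a touch that starts inside it but ends 200 pixels lower is treated as a primary-area gesture rather than a menu tap.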

As illustrated in FIG. 3, touch device 200 receives touch events from the user as they are input using a Responder Touch Events Module 250, which passes the touch events to a Touch Event Handler 252. Touch Event Handler 252 interprets the touch events received by the Responder Touch Events Module 250 to identify information about the touch event. For example, Touch Event Handler 252 can identify the pixel location of the touch start and the pixel location of the touch end, and can identify touch movement. It also interprets the duration of the touch event. The Touch Event Handler 252 can interpret whether the touch event resulted in a menu input, a selection action, context menu input or top menu input. This information is formatted into packets and encrypted by the Touch Packer Encryption and Formatter module 254 and sent by the TCP Client 256 to the remotely controlled device 300.
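The kind of summary a handler such as Touch Event Handler 252 extracts can be sketched as below. The sketch assumes, as a hypothetical input format, that a touch arrives as a time-ordered list of `(x, y, t)` samples in pixels and seconds; the `TouchSummary` type and `summarize_touch` function are illustrative names only.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

# One raw touch sample: pixel x, pixel y, timestamp in seconds (assumed format).
Sample = Tuple[int, int, float]


@dataclass(frozen=True)
class TouchSummary:
    start: Tuple[int, int]   # pixel location of the touch start
    end: Tuple[int, int]     # pixel location of the touch end
    dx: int                  # horizontal movement in pixels
    dy: int                  # vertical movement in pixels
    duration_s: float        # duration of the touch event


def summarize_touch(samples: Sequence[Sample]) -> TouchSummary:
    """Reduce the samples of one touch to start, end, movement and duration."""
    if not samples:
        raise ValueError("a touch event needs at least one sample")
    x0, y0, t0 = samples[0]
    x1, y1, t1 = samples[-1]
    return TouchSummary(start=(x0, y0), end=(x1, y1),
                        dx=x1 - x0, dy=y1 - y0, duration_s=t1 - t0)
```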

While FIG. 3 illustrates a TCP Client 256 for transferring data from the remote 200 to the remotely controlled device 300, any protocol can be used to send data from device 200 to 300. However, in this embodiment TCP is discussed for speed benefits. TCP can be configured to work in a substantially unidirectional fashion without requiring handshakes or other unnecessary communication that can increase latency in the transmission of data from the remote to the remotely controlled device. It will be appreciated that whatever technology is chosen, whether a direct device-to-device connection or a local area network, it should allow relatively fast and reliable delivery of the information sent by the touch device 200.

Additionally, for purposes of speedy transmission of commands from the touch device 200 to the remotely controlled device 300, the amount of data sent should be kept to a minimum. In some embodiments the amount of data transferred ranges from around 20 bytes up to about 50 bytes per packet. While it can be appreciated that the touch interface of the touch device 200 is a versatile instrument and is capable of recording and interpreting data into more complex instructions for the remote device, complexity is not beneficial. Instead, simple information is recorded and transmitted by touch device 200.
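A small, fixed-size packet of generic touch data, written to a stream socket without waiting for any application-level reply, can be sketched as follows. The 20-byte layout (version, kind, start and end coordinates, duration, sequence number, padding), the field names and the use of `TCP_NODELAY` are assumptions for illustration, chosen only to fall within the 20 to 50 byte range mentioned above. The encryption step of module 254 is omitted, and TCP itself still acknowledges segments at the transport level.

```python
import socket
import struct

# Hypothetical 20-byte wire layout, network byte order, no alignment padding:
# version, kind, start_x, start_y, end_x, end_y, duration_ms, sequence, 2 pad bytes.
PACKET_FORMAT = "!BBHHHHIIxx"
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)  # 20 bytes

KIND_START, KIND_MOVE, KIND_END = 0, 1, 2


def pack_touch(kind, start, end, duration_ms, seq, version=1) -> bytes:
    """Pack generic touch data; interpretation is left to the controlled device.

    Coordinates must fit in 16 unsigned bits (0..65535).
    """
    return struct.pack(PACKET_FORMAT, version, kind, *start, *end, duration_ms, seq)


def unpack_touch(packet: bytes):
    """Inverse of pack_touch, as the receiving side might use it."""
    return struct.unpack(PACKET_FORMAT, packet)


def send_touch(sock: socket.socket, packet: bytes) -> None:
    """Write one packet and return immediately; no reply is read."""
    if sock.family in (socket.AF_INET, socket.AF_INET6):
        # Disable Nagle's algorithm so small packets are not held back.
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    sock.sendall(packet)
```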

In the remote context it is important that the commands from the remote 200 are transmitted and received by the remotely controlled device 300 quickly. Therefore, in the embodiment illustrated in FIG. 3, the remote sends largely generic touch device data and leaves the interpretation of the data to the remotely controlled device 300. For example, TCP server 302 receives the TCP data transmission from a TCP client 256 and the data is decrypted with module 304.

The generic touch data (touch start, move, end, time/velocity) can be interpreted by an interpreter such as Touch Event Interpreter 306 that interprets the generic touch event data into events that can be understood and used by the remotely controlled device 300. In this example, the information such as touch start, move and end that was recorded by the remote 200 can be interpreted into events such as left, right, up, down, swipe left, swipe right, swipe up and swipe down, or interpreted in a generic way as touch start, move or end.
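How an interpreter such as Touch Event Interpreter 306 might turn generic start, end and duration data into the candidate events listed above can be sketched as follows. The sketch assumes screen coordinates with y growing downward, a hypothetical 5-pixel minimum movement below which a touch is reported generically, and a hypothetical 300 px/s speed above which a movement is also reported as a swipe. One touch can therefore yield both a directional event and a swipe event.

```python
import math

MIN_MOVE_PX = 5.0        # hypothetical: smaller movements are not given a direction
SWIPE_MIN_SPEED = 300.0  # hypothetical: px/s at or above which a move is also a swipe


def interpret_touch(start, end, duration_s):
    """Translate generic touch data into a list of candidate events."""
    dx = end[0] - start[0]
    dy = end[1] - start[1]
    distance = math.hypot(dx, dy)
    if distance < MIN_MOVE_PX:
        return ["touch_start", "touch_end"]  # no clear direction: generic events
    if abs(dx) >= abs(dy):
        direction = "left" if dx < 0 else "right"
    else:
        direction = "up" if dy < 0 else "down"  # y grows downward on screen
    events = [direction]
    speed = distance / duration_s if duration_s > 0 else math.inf
    if speed >= SWIPE_MIN_SPEED:
        events.insert(0, "swipe_" + direction)
    return events
```

For a quick gesture from X to X-10, such as the one at 340 in FIG. 4, `interpret_touch((100, 200), (90, 200), 0.02)` returns `["swipe_left", "left"]`; the same movement made slowly yields only `["left"]`.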

Also illustrated is an Event Handler 308, which can receive inputs and pass them onto a controller for a graphical user interface. As illustrated, the Event Handler 308 can receive events originating from a standard remote control 330 that have been received and interpreted by the Human Interface Event Manager 310, and can also receive events that originate from the touch device 200 that have been interpreted by the Touch Event Interpreter 306.

One or more UI controllers control each graphical user interface of the remotely controlled device. As illustrated in FIG. 3, the UI controller is labeled as a Focused UI controller 312 because it represents the UI controller that is associated with the GUI that is currently being displayed by the remotely controlled device. While there can be many UI controllers, the focused UI controller is the controller that is associated with the GUI screen currently displayed.

The focused UI controller 312 receives events from the event handler 308. The focused UI controller 312 receives all of the possible inputs and reacts to whichever input the focused UI controller is configured to accept.

FIGS. 4-6 illustrate how different focused UI controllers handle the same set of touch events. At 340 a user enters a gesture that begins at an arbitrary point X on the touch interface and ends at a point X-10, which is to the left of X, and the touch gesture has some velocity. The touch input is received, processed, and sent to the remotely controlled device as described above. At the remotely controlled device, the touch event interpreter 346 receives the data descriptive of the touch input and can interpret the data into events 342 that can be recognized by the remotely controlled device. In this example, the touch event interpreter outputs a swipe left event and a left event.

The event handler 348 passes the left event and swipe left event to the UI controller that is currently "in focus." A UI controller is said to be "in focus" when it is controlling at least a portion of the currently displayed user interface screen. The focused UI controller receives the events from the event handler 348. In FIGs. 4-6, three different focused UI controllers exist: a movie menu focused UI controller 315, a slide show focused UI controller 317, and a media playback focused UI controller 319. In FIG. 4, the movie menu focused UI controller 315 receives the touch events 342 from the event handler 348, recognizes the left swipe event at 344, and causes the UI to appear to scroll through the selections and ease out of the scroll operation at 350. In FIG. 5, the slide show focused controller 317 is the active controller and it recognizes the left event at 352, which results in the user interface returning to the previous slide in the slide show at 354. In FIG. 6, the media playback focused UI controller 319 is the active controller and it recognizes the swipe left event at 356, which results in the user interface returning to the previous slide in the slide show at 358. Together, FIGs. 4-6 illustrate how the same touch input can be recognized as several different events and how the focused UI controllers choose from among the events to cause an action in the user interface.
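By way of illustration only, the routing of FIGS. 4 and 5 can be sketched as an event handler that forwards each set of interpreted events to whichever controller is in focus. The sketch assumes that events arrive as simple strings; the class names, method names and action labels are hypothetical and do not form part of the described embodiments. The media playback controller 319 is left out for brevity.

```python
from typing import Dict, Iterable, Optional


class FocusedUIController:
    """Hypothetical UI controller: maps the events it accepts to UI actions."""

    def __init__(self, name: str, accepted: Dict[str, str]):
        self.name = name
        self.accepted = accepted

    def handle(self, events: Iterable[str]) -> Optional[str]:
        # React to the first incoming event this controller is configured to accept.
        for event in events:
            if event in self.accepted:
                return self.accepted[event]
        return None  # no accepted event: the input has no effect in this context


class EventHandler:
    """Forwards every set of events to the controller currently in focus."""

    def __init__(self, controllers: Dict[str, FocusedUIController], focused: str):
        self.controllers = controllers
        self.focused = focused

    def set_focus(self, screen: str) -> None:
        # Called when the remotely controlled device displays a different GUI screen.
        if screen not in self.controllers:
            raise KeyError(screen)
        self.focused = screen

    def dispatch(self, events: Iterable[str]) -> Optional[str]:
        return self.controllers[self.focused].handle(events)


# The same interpreted touch yields different actions in different contexts.
handler = EventHandler(
    {
        "movie_menu": FocusedUIController("movie menu", {"swipe_left": "scroll_and_ease_out"}),
        "slide_show": FocusedUIController("slide show", {"left": "previous_slide"}),
    },
    focused="movie_menu",
)
```

With the movie menu in focus, `handler.dispatch(["swipe_left", "left"])` returns `"scroll_and_ease_out"`; after `handler.set_focus("slide_show")` the same events return `"previous_slide"`.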

While in the examples illustrated in FIGs. 4-6 the focused UI controllers each recognized only one of the two input events, a focused UI controller can also recognize multiple events as being tied to actions. For example, the movie menu controller could have recognized both the swipe event and the left event, but in such cases, the focused UI controller can be configured to choose one event over the other if multiple events are recognized.
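One possible way of configuring a controller to choose one event over another, offered as an assumption rather than as part of the description, is a fixed priority list. In the sketch below the class name, the priority list and the action labels (including `move_selection_left`) are hypothetical.

```python
from typing import Dict, List, Optional, Sequence


class PriorityController:
    """A focused UI controller that may recognize several events per touch.

    When more than one incoming event is tied to an action, the event that
    appears first in `priority` wins (a hypothetical policy).
    """

    def __init__(self, actions: Dict[str, str], priority: Sequence[str]):
        self.actions = actions
        self.priority: List[str] = list(priority)

    def choose(self, events: Sequence[str]) -> Optional[str]:
        recognized = [e for e in events if e in self.actions]
        if not recognized:
            return None
        # Rank recognized events by configured priority; unlisted events rank last.
        rank = {e: i for i, e in enumerate(self.priority)}
        best = min(recognized, key=lambda e: rank.get(e, len(rank)))
        return self.actions[best]


movie_menu = PriorityController(
    {"swipe_left": "scroll", "left": "move_selection_left"},
    priority=["swipe_left", "left"],
)
```

Here `movie_menu.choose(["left", "swipe_left"])` returns `"scroll"` regardless of the order in which the events arrive, while a lone `"left"` still moves the selection.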

At this point it is informative to compare how the currently described technology works with a standard remote 330 in FIG. 3. One way to conceptualize the remote 200 is as a universal remote that can output the same data regardless of the device it is communicating with. It is a remote that has an unstructured interface and therefore any gesture or command can be input. In contrast, remote 330 is specific to the remotely controlled device. For the purposes of this discussion, the remote 330 is a simple remote having only a menu button, up, down, left and right. The remotely controlled device is configured to accept inputs from remote 330 and each input is tied to a function in the remotely controlled device. Even a traditional universal remote is programmed to work in the same manner as remote 330, outputting commands that are specific to the remotely controlled device.

Inputs from the touch device 200 are received by the touch event interpreter 306, which can interpret the touch data into touch events that can potentially be used by the remotely controlled device. The event handler 308 forwards the events to the focused UI controller 312. An input from the standard remote 330 can be received by the human interface device event manager 310 and interpreted into an event that can be used by the remotely controlled device. Just as with the events received from the touch device 200, the event handler 308 can forward the command from the standard remote 330 to the focused UI controller 312.

In some embodiments the focused UI controller 312 can be configured to accept additional inputs beyond those possible using the standard remote 330. In such embodiments, the focused UI controller 312 can choose from among the inputs that it wants to accept. In these embodiments the focused UI controller 312 is configured to make this choice. For example, the focused UI controller 312 can be informed that it is receiving events from the touch device 200 and consider those commands preferable to the simplistic inputs, such as a left event, given by the standard remote 330. In such a case, if the focused UI controller 312 were to receive events from the touch device 200, it would need to choose between the simple event and the higher-level event, such as a swipe, since both are represented. The focused UI controller 312 can learn that the data is from the touch device 200 and choose to fast forward a movie based on the swipe input as opposed to skipping a movie chapter based on a left event.
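A source-aware choice of this kind can be sketched as follows. The sketch assumes that the event handler tags each batch of events with its source; the `Source` enum, the `playback_action` function and the action table are hypothetical. The table follows the example in the text (a swipe leading to fast forward, a left event leading to a chapter skip); the description does not state the swipe direction, so `swipe_left` is itself an assumption.

```python
from enum import Enum
from typing import Optional, Sequence


class Source(Enum):
    TOUCH_DEVICE = "touch_device"        # e.g. touch device 200
    STANDARD_REMOTE = "standard_remote"  # e.g. standard remote 330


# Illustrative mapping only, following the example in the text.
PLAYBACK_ACTIONS = {"swipe_left": "fast_forward", "left": "skip_chapter"}


def playback_action(events: Sequence[str], source: Source) -> Optional[str]:
    """Choose an action for a media playback context.

    Higher-level (swipe) events are preferred when they come from the touch
    device; events from a standard remote are limited to simple ones.
    """
    candidates = [e for e in events if e in PLAYBACK_ACTIONS]
    if source is Source.TOUCH_DEVICE:
        # Stable sort: swipe events (key False) move ahead of simple events.
        candidates.sort(key=lambda e: not e.startswith("swipe_"))
    else:
        candidates = [e for e in candidates if not e.startswith("swipe_")]
    return PLAYBACK_ACTIONS[candidates[0]] if candidates else None
```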

Just as the focused UI controller 312 can be configured to accept different commands based on which device the commands come from, it can also interpret the commands based on the context of the interface that is currently displayed. As mentioned above, each focused UI controller is specific to a different context, and there can be many more UI controllers, each responsible for its own function. Since each UI controller is responsible for a different part of the UI or a different screen having a different context, each focused UI controller can perform different functions given the same input.

As discussed above, a user can provide a variety of inputs into the primary touch sensitive area 204, but the result of the inputs can vary depending on the context in which the input is given. The focused UI controller that is specific to a particular GUI can be programmed to interpret inputs based on elements or characteristics of its context. For example, in the case of a remote controlling a multimedia application running on a remote device, there can be at least a menu context (FIG. 7) and a media playback context (FIG. 8), each having its own focused UI controller governing its behavior. While remotely controlling a multimedia application is discussed as one exemplary embodiment, many other devices can be controlled according to the concepts discussed herein including, but not limited to, televisions, cable boxes, digital video recorders, and digital disc players (DVD, CD, HD-DVD, Blu-ray, etc.).

Returning to the example of a multimedia application running on a remote device: in a menu context, a user can potentially browse media by title, artist, media type, playlist, album name or genre (it should be understood that some of these categories for browsing media are more or less applicable to different media types such as movies or songs). In FIG. 7, a user can browse the menu interface by movie titles arranged in a list. In the menu context, various inputs can be entered into the primary touch sensitive area to navigate the menu and make a selection. For example, and as shown in the table below, a user can swipe the interface in a desired direction, which can result in a scroll operation. As is known in the art, the speed of the gesture and the speed of the scrolling operation can be related. For example, a faster swipe gesture can result in a faster scroll operation and/or a longer scroll duration.
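One common way of relating swipe speed to scroll speed and duration is an initial scroll speed proportional to the swipe speed that then decays by a constant factor each frame until it falls below a cut-off. The Python sketch below illustrates that technique only. The function name `ease_out_scroll` and the `gain`, `friction` and `min_speed` constants are hypothetical tuning values, not values taken from this description.

```python
from typing import List


def ease_out_scroll(swipe_velocity: float, gain: float = 1.5,
                    friction: float = 0.9, min_speed: float = 1.0) -> List[float]:
    """Return per-frame scroll offsets for one swipe (the sign gives the direction).

    gain, friction and min_speed are hypothetical tuning constants.
    """
    if not 0.0 < friction < 1.0 or min_speed <= 0.0:
        raise ValueError("friction must be in (0, 1) and min_speed must be positive")
    speed = swipe_velocity * gain  # a faster swipe gives a faster initial scroll
    offsets: List[float] = []
    while abs(speed) >= min_speed:
        offsets.append(speed)
        speed *= friction          # decelerate every frame: the "ease out"
    return offsets


slow, fast = ease_out_scroll(5.0), ease_out_scroll(20.0)
print(len(slow) < len(fast), sum(slow) < sum(fast))  # True True
```

Because the decay factor is the same for every swipe, a faster swipe both starts at a higher speed and takes more frames to fall below the cut-off, giving the faster and longer scroll described above.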

Additionally the primary touch sensitive area can also receive tap gestures, which can select an item. Further, dragging a user's finger across the primary touch sensitive area 204 can move a selected or highlighted item.

TABLE 1

Menu Navigation Context

| Touch Action | Result |
| --- | --- |
| Single digit drag in the desired direction | Move selection |
| Single digit swipe in the desired direction | Scroll selection and ease out |
| Single digit tap in main selection area | Select item |

Many of the same actions can result in different outcomes or actions performed on or by the remotely controlled device in other contexts. For example, and as seen in the table below, some of the same actions described above cause different results in the media playback context, which is illustrated in FIG. 8. A single digit tap in the primary touch sensitive area can cause a media file to play or pause in the playback context, as opposed to making a selection in the menu context. Similarly, a drag action can result in a shuttle transport operation of shuttle 367. Swipes to the right and left can move playback forward and backward respectively, as opposed to the scroll operation in the menu context. An upward swipe can cause an information display 365 to cycle through various information that a media player might have available to show. A downward swipe can show a chapter selection menu.

TABLE 2

Media Playback Context

| Touch Action | Result |
| --- | --- |
| Single digit tap in the selection area | Toggle Play/Pause |
| Single digit drag left/right | Shuttle transport to left/right |
| Single digit swipe left | Skip backwards 10 seconds |
| Single digit swipe right | Skip forwards 10 seconds |
| Single digit swipe up | Cycle Info Display |
| Single digit swipe down | Show Chapter Selection Menu |

Other inputs do not need to vary by context. For example, a tap in the menu area 206 returns to the previous menu screen. Holding a finger in the menu area returns to the top menu. Also some inputs into the primary touch sensitive area 204 will always result in the same action - a two-digit press and hold in the primary touch sensitive area 204 will return to the menu for the context that the device is currently displaying.

TABLE 3

Any Context

| Touch Action | Result |
| --- | --- |
| Single digit tap in menu area | Menu |
| Single digit press & hold in menu area | Top Menu |
| Two digit press & hold in main selection area | Context Menu |
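The behavior summarized in Tables 1 to 3 amounts to a lookup keyed on the current context, with the context-independent bindings of Table 3 taking precedence. The following Python sketch illustrates this under stated assumptions: the gesture vocabulary (`kind`, `digits`, `area`, `direction`), the area names `"primary"` (standing for primary touch sensitive area 204) and `"menu_area"` (standing for menu area 206), the context names and the command strings are all hypothetical.

```python
from typing import Dict, Optional, Tuple

GlobalKey = Tuple[str, int, str]                   # (kind, digits, area)
ContextKey = Tuple[str, int, str, Optional[str]]  # (kind, digits, area, direction; None = any)

# Table 3: bindings that apply in any context.
ANY_CONTEXT: Dict[GlobalKey, str] = {
    ("tap", 1, "menu_area"): "menu",
    ("press_hold", 1, "menu_area"): "top_menu",
    ("press_hold", 2, "primary"): "context_menu",
}

# Tables 1 and 2: bindings that depend on the current GUI context.
BY_CONTEXT: Dict[str, Dict[ContextKey, str]] = {
    "menu_navigation": {
        ("drag", 1, "primary", None): "move_selection",
        ("swipe", 1, "primary", None): "scroll_and_ease_out",
        ("tap", 1, "primary", None): "select_item",
    },
    "media_playback": {
        ("tap", 1, "primary", None): "toggle_play_pause",
        ("drag", 1, "primary", "left"): "shuttle_left",
        ("drag", 1, "primary", "right"): "shuttle_right",
        ("swipe", 1, "primary", "left"): "skip_back_10s",
        ("swipe", 1, "primary", "right"): "skip_forward_10s",
        ("swipe", 1, "primary", "up"): "cycle_info_display",
        ("swipe", 1, "primary", "down"): "show_chapter_menu",
    },
}


def interpret(context: str, kind: str, digits: int, area: str,
              direction: Optional[str] = None) -> Optional[str]:
    """Return the command for a gesture in the current context, or None if unbound."""
    command = ANY_CONTEXT.get((kind, digits, area))
    if command is not None:
        return command
    table = BY_CONTEXT.get(context, {})
    # Prefer a direction-specific binding, then fall back to a direction-agnostic one.
    command = table.get((kind, digits, area, direction))
    if command is None:
        command = table.get((kind, digits, area, None))
    return command


print(interpret("menu_navigation", "tap", 1, "primary"))  # select_item
print(interpret("media_playback", "tap", 1, "primary"))   # toggle_play_pause
```

The same single digit tap thus yields different commands in the two contexts, while the Table 3 gestures resolve identically wherever they are entered.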

In addition to the various touch inputs described above, many more inputs are also possible. Nothing in the above discussion should be seen as limiting the available touch inputs or gestures that can be used with the described technology. For example, in addition to the one- or two-finger inputs described above, three- or four-finger inputs are also contemplated. Additionally, more complex gestures, such as separating two or more fingers and providing inputs relative to each finger, can also be useful. Such gestures are already known in the art, for example rotating one finger around the other to rotate an onscreen image, or moving two fingers away from or towards each other to perform a zoom in or zoom out operation. Many others are considered within the level of skill in the art.

Furthermore, while the term digit is used above and throughout the specification, it is also not meant to be limiting. While in some embodiments a digit refers to a finger of a human hand, in some embodiments digit can refer to anything that is capable of being sensed by a capacitive device. In some embodiments, digit can also refer to a stylus or other object for inputting into a display-input device.

After the focused UI controller accepts an input, it affects the UI of the remotely controlled device. In many cases this may be the first feedback that the user will receive that the proper command was given. Such a feedback loop increases the responsiveness of the system and allows the remote interface to be less complicated. However, in other embodiments, other types of feedback can be supplied. For example, audible feedback can be supplied to the user so that she at least knows a command was issued. Alternatively, the remote can vibrate or provide any other desirable type of feedback. The feedback can also be command specific; a different sound or vibration for each command is possible.

In some embodiments, the remotely controlled device may not have an event interpreter. In these embodiments, the remote would send the data representative of touch inputs to the remotely controlled device and the focused UI controller can be configured to interpret the data.

In some embodiments it can be useful to allow the remote to learn about changing menus or displays of the remote device. In such embodiments, a separate communications channel can be opened, for example using the hypertext transfer protocol (HTTP), for transferring information between the devices. To maintain remote performance, most communications can be one-directional for faster speed, but bidirectional communications can be used when needed. For example, even though most of the communications from the remote to the remotely controlled device are transferred using TCP, HTTP or DAAP can be used to inform the remote of special cases, such as when additional information is available or when a keyboard is being displayed on the remotely controlled device. In such instances, a keyboard, for example, can be displayed on the remote, and inputs into the keyboard can be transferred using HTTP. Similarly, when information such as that shown in FIG. 8 is available, an information button can be displayed on the remote to bring up information window 365.
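A minimal Python sketch of how the remote might react to such special-case notifications is given below. It deliberately leaves out the transport (HTTP, DAAP or otherwise) and assumes a hypothetical JSON message format with an `event` field; the event names, the `RemoteUIState` class and the helper functions are illustrative only.

```python
import json
from dataclasses import dataclass


@dataclass
class RemoteUIState:
    """What the remote currently shows besides its unstructured touch area."""
    show_keyboard: bool = False
    show_info_button: bool = False


def apply_notification(state: RemoteUIState, body: str) -> None:
    """Update the remote's UI state from one (hypothetical) notification message."""
    event = json.loads(body).get("event")
    if event == "keyboard_shown":
        state.show_keyboard = True
    elif event == "keyboard_hidden":
        state.show_keyboard = False
    elif event == "info_available":
        state.show_info_button = True
    elif event == "info_unavailable":
        state.show_info_button = False
    # Unknown events are ignored so that the remote keeps working.


def encode_keyboard_input(text: str) -> str:
    """Encode text typed on the remote's keyboard for the return trip."""
    return json.dumps({"event": "keyboard_input", "text": text})


state = RemoteUIState()
apply_notification(state, '{"event": "keyboard_shown"}')
print(state.show_keyboard)  # True
```

In this arrangement the touch data itself continues to flow in one direction only; the back channel is consulted solely for the special cases, which keeps the common path fast.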

FIG. 9 illustrates the method for controlling the remote device. In step 418, a remote application provides an unstructured touch sensitive area for receiving touch inputs from a user. The touch inputs are received and interpreted into at least touch start, touch end, move and duration data in step 422. The data is formatted and sent to the remotely controlled device in step 424.
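Steps 422 and 424 can be illustrated by the following Python sketch, which reduces the raw samples of one touch to start, end, move and duration data and packs them into bytes. The `(timestamp, x, y)` sample layout, the `TouchRecord` type and the little-endian byte layout are assumptions made for illustration; the description does not specify a wire format.

```python
import struct
from dataclasses import dataclass
from typing import List, Tuple

Sample = Tuple[float, float, float]  # (timestamp in seconds, x, y) reported by the touch screen


@dataclass(frozen=True)
class TouchRecord:
    start: Tuple[float, float]
    end: Tuple[float, float]
    moves: Tuple[Tuple[float, float], ...]  # (dx, dy) between consecutive samples
    duration: float


def summarize(samples: List[Sample]) -> TouchRecord:
    """Step 422 (sketch): turn the samples of one touch into start, end, move and duration data."""
    if not samples:
        raise ValueError("a touch needs at least one sample")
    t0, x0, y0 = samples[0]
    t1, x1, y1 = samples[-1]
    moves = tuple((b[1] - a[1], b[2] - a[2]) for a, b in zip(samples, samples[1:]))
    return TouchRecord((x0, y0), (x1, y1), moves, t1 - t0)


def encode(record: TouchRecord) -> bytes:
    """Step 424 (sketch): pack a record using a hypothetical little-endian layout."""
    header = struct.pack("<5fI", *record.start, *record.end, record.duration, len(record.moves))
    body = b"".join(struct.pack("<2f", dx, dy) for dx, dy in record.moves)
    return header + body


record = summarize([(0.0, 10.0, 10.0), (0.1, 20.0, 10.0), (0.2, 40.0, 10.0)])
print(record.moves)         # ((10.0, 0.0), (20.0, 0.0))
print(len(encode(record)))  # 40
```

Note that the remote sends only generic touch data; no command is chosen at this stage, since that decision is left to the remotely controlled device in step 426.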

In step 426, the remotely controlled device interprets the received data with knowledge of the context of the screen that is currently being displayed by the remotely controlled device. Depending on the inputs received and the context of the current display, the remotely controlled device interprets the data received from the remote and accepts the command in step 428 which causes the remotely controlled device to perform the command and display the result in step 430.

It will be appreciated that while the above description uses a media playback device with associated software, the technology is equally applicable to other remotely controlled devices such as televisions, DVRs, DVD players, Blu-ray players, cable boxes, etc. For example, the remotely controlled device can be programmed to accept and interpret generic touch screen interface data and respond to those inputs. Alternatively, the remote itself can be provided with instructions on how to communicate with almost any remotely controlled device. As long as either the remote or the remotely controlled device can interpret the touch data based on the context of what is currently being displayed or caused to be displayed by the remote device, the principles of the described technology can apply.

In some embodiments fewer or more touch sensitive areas are possible. However, additional touch sensitive areas having structured interfaces increase the possibility of accidental commands being input into the remote. The best interfaces provide an overall user experience wherein the user does not need to look at the remote to make a selection in most instances. However, it will be appreciated that if the user is not looking at the remote, in a touch environment wherein the entire device feels similar to the user, accidental inputs are possible and may even become likely if too many individually selectable items are available.

Several methods can be used to prevent accidental inputs. One of these, as described above, uses an accelerometer device to determine the device's orientation and, based on that orientation, determine what type of command the user is trying to enter. If the device is horizontal, the user is probably not looking at the device and gestures are likely intended to control the remotely controlled device. However, if the device is more vertical, or angled so that the user can view the screen, the user is probably viewing the screen and inputs are more likely intended for the structured interface buttons. Another alternative is illustrated in FIG. 5, wherein the remote device 500 is rotated into a landscape orientation. In this orientation, the entire interface is unstructured and only the primary selection area 502 and the menu selection area 504 are present. This embodiment would eliminate accidental inputs by removing the structured portion of the interface when the user only wants to use the unstructured interface to enter touch gestures to control the remotely controlled device. When the device is rotated into the portrait orientation, a structured interface can become available. Yet another way to reduce unwanted inputs is to turn the display portion of the touch screen completely off, regardless of the orientation of the device. In these embodiments only unstructured input into the primary selection area and menu area would be available. If the user wanted one of the structured options, the screen could be turned back on by some actuation mechanism, such as a hardware button, shaking the device, or a touch gesture.
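The accelerometer approach can be sketched in Python as follows. The sketch assumes a reading taken while the device is at rest (so the accelerometer measures gravity only), an axis convention in which z is normal to the screen, and a hypothetical 30 degree threshold; the function names are illustrative. Sign conventions differ between platforms, so only the magnitude of the z component is used.

```python
import math


def tilt_degrees(ax: float, ay: float, az: float) -> float:
    """Angle between the screen plane and the horizontal, from an at-rest accelerometer reading.

    At rest the reading is gravity only, so |az| / |g| is the cosine of the
    angle between the screen normal and the vertical.
    """
    g = math.sqrt(ax * ax + ay * ay + az * az)
    if g == 0.0:
        raise ValueError("reading contains no gravity vector")
    return math.degrees(math.acos(min(1.0, abs(az) / g)))


def route_input(ax: float, ay: float, az: float, threshold: float = 30.0) -> str:
    """Hypothetical policy: a near-flat device sends gestures to the remotely controlled device."""
    if tilt_degrees(ax, ay, az) < threshold:
        return "unstructured_gesture"
    return "structured_interface"


print(route_input(0.0, 0.0, -9.81))  # unstructured_gesture (lying flat)
print(route_input(0.0, -9.81, 0.0))  # structured_interface (held upright)
```

The `min(1.0, ...)` clamp guards `math.acos` against rounding that could push the ratio marginally above 1.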

Additionally, and as described above, accidental inputs can be avoided by configuring the touch interface to treat any input that drifts into or out of a structured input as an input into the unstructured interface. In this way, only deliberate selections are registered.
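A minimal Python sketch of this drift rule follows. It assumes that a touch is available as the list of points it passed through and that structured buttons are axis-aligned rectangles; the `Rect` type, the `classify_touch` function and the button name `"info"` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Rect:
    x: float
    y: float
    width: float
    height: float

    def contains(self, p: Point) -> bool:
        return self.x <= p[0] < self.x + self.width and self.y <= p[1] < self.y + self.height


def classify_touch(path: List[Point], buttons: Dict[str, Rect]) -> Tuple[str, Optional[str]]:
    """Register a button press only if the whole touch path stays inside one button.

    A touch that drifts into or out of a button is treated as an unstructured gesture.
    """
    if not path:
        raise ValueError("empty touch path")
    for name, rect in buttons.items():
        if all(rect.contains(p) for p in path):
            return ("button", name)
    return ("gesture", None)


buttons = {"info": Rect(0, 0, 50, 50)}
print(classify_touch([(10, 10), (20, 20)], buttons))  # ('button', 'info')
print(classify_touch([(10, 10), (80, 10)], buttons))  # ('gesture', None)
```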

Embodiments within the scope of the present invention may also include computer-readable media for carrying or having computer-executable instructions or data structures stored thereon. Such computer-readable media can be any available media that can be accessed by a general purpose or special purpose computer. By way of example, and not limitation, such tangible computer-readable media can comprise RAM, ROM, EEPROM, CD-ROM or other optical disk storage, magnetic disk storage or other magnetic storage devices, or any other medium which can be used to carry or store desired program code means in the form of computer-executable instructions or data structures. When information is transferred or provided over a network or another communications connection (either hardwired, wireless, or combination thereof) to a computer, the computer properly views the connection as a computer-readable medium. Thus, any such connection is properly termed a computer-readable medium. Combinations of the above should also be included within the scope of the tangible computer-readable media.

Computer-executable instructions include, for example, instructions and data which cause a general purpose computer, special purpose computer, or special purpose processing device to perform a certain function or group of functions. Computer-executable instructions also include program modules that are executed by computers in standalone or network environments. Generally, program modules include routines, programs, objects, components, and data structures that perform particular tasks or implement particular abstract data types. Computer-executable instructions, associated data structures, and program modules represent examples of the program code means for executing steps of the methods disclosed herein. The particular sequence of such executable instructions or associated data structures represents examples of corresponding acts for implementing the functions described in such steps.

Those of skill in the art will appreciate that other embodiments of the invention may be practiced in network computing environments with many types of computer system configurations, including personal computers, hand-held devices, multi-processor systems, microprocessor-based or programmable consumer electronics, network PCs, minicomputers, mainframe computers, and the like. Embodiments may also be practiced in distributed computing environments where tasks are performed by local and remote processing devices that are linked (either by hardwired links, wireless links, or by a combination thereof) through a communications network. In a distributed computing environment, program modules may be located in both local and remote memory storage devices.

Communication at various stages of the described system can be performed through a local area network, a token ring network, the Internet, a corporate intranet, 802.11 series wireless signals, fiber-optic network, radio or microwave transmission, etc. Although the underlying communication technology may change, the fundamental principles described herein are still applicable.

The various embodiments described above are provided by way of illustration only and should not be construed to limit the invention. For example, the principles herein may be applied to any remotely controlled device. Further, those of skill in the art will recognize that communication between the remote and the remotely controlled device need not be limited to communication over a local area network but can include communication over infrared channels, Bluetooth or any other suitable communication interface. Those skilled in the art will readily recognize various modifications and changes that may be made to the present invention without following the example embodiments and applications illustrated and described herein, and without departing from the scope of the present disclosure.

1. A method for remotely controlling a device (300), comprising:

R. 43(1)(a)

Prior art portion of independent claim wherever appropriate

receiving touch input data at a remotely controlled device (300) from a remote controller (200) comprising a touch screen (202) and a processing unit, the touch input data comprising information including positional, movement and durational input parameters, wherein the touch input data can be interpreted by the remotely controlled device (300) as one of a plurality of potential commands in a first context of a graphical user interface (GUI) of the remotely controlled device (300) and as another of the plurality of potential commands in a second context of the GUI, interpreting the touch input data in combination with a current context of the GUI to determine an appropriate command for the current context of the GUI; and

updating the GUI in response to the appropriate command, characterised in that the method further comprises sending from the remotely controlled device (300) information via a bidirectional communication protocol to the remote controller (200) informing the remote controller to display a keyboard on the touch screen, at least partially in response to the information received via the bidirectional communication protocol.

2. A system comprising:

a remote controller (200) comprising:

a touch screen (202) configured to receive touch input,

a processing unit for receiving the electronic signals from the touch screen and translating the signals into touch input data, and

a communication interface configured for:

sending the touch input data to a remotely controlled device (300), the data including at least positional, movement and durational input parameters, and

receiving information from the remotely controlled device (300) via a bidirectional communication protocol informing the remote controller to display a keyboard, wherein the remote controller is configured to display a keyboard on the touch screen, at least partially in response to the information received via the bidirectional communication protocol,

a remotely controlled device (300) configured to present a graphical user interface (GUI) in a plurality of contexts,

the remotely controlled device comprising:

a display for displaying a graphical user interface (GUI),

a communication interface configured for:

receiving the touch input data, wherein the touch input data can be interpreted by the remotely controlled device (300) as one of a plurality of potential commands in a first context of a graphical user interface (GUI) of the remotely controlled device (300) and as another of the plurality of potential commands in a second context of the GUI, and

a processing unit configured for interpreting the touch input data in combination with a current context of the GUI to determine an appropriate command for the current context, and updating the GUI in response to the appropriate command,

characterized in that

the communication interface is further configured for:

sending information via a bidirectional communication protocol informing the remote controller to display a keyboard on the touch screen at least partially in response to the information received via the bidirectional communication protocol.

3. A device configured with an application causing the device to function as a remote controller (200) for a remotely controlled device, comprising:

a touch screen (202) configured to receive touch input,

a processing unit for receiving the electronic signals from the touch screen and translating the signals into touch input data, and

a communication interface configured for:

sending the touch input data to a remotely controlled device (300), the data including at least positional, movement and durational input parameters, wherein the touch input data can be interpreted by the remotely controlled device (300) as one of a plurality of potential commands in a first context of a graphical user interface (GUI) of the remotely controlled device (300) and as another of the plurality of potential commands in a second context of the GUI,

wherein the remotely controlled device is configured for interpreting the touch input data in combination with a current context of the GUI to determine an appropriate command for the current context, and updating the GUI in response to the appropriate command,

characterized in that

the communication interface is further configured for:

receiving information from the remotely controlled device (300) via a bidirectional communication protocol informing the remote controller to display a keyboard on the touch screen, at least partially in response to the information received via the bidirectional communication protocol.

4. A device configured with an application causing the device to function as a remotely controlled device (300) being controlled by a remote controller (200), comprising:

a display for displaying a graphical user interface (GUI),

a communication interface configured for:

receiving touch input data from a remote controller (200), the data including at least positional, movement and durational input parameters, wherein the touch input data can be interpreted by the remotely controlled device (300) as one of a plurality of potential commands in a first context of a graphical user interface (GUI) of the remotely controlled device (300) and as another of the plurality of potential commands in a second context of the GUI,

a processing unit configured for interpreting the touch input data in combination with a current context of the GUI to determine an appropriate command for the current context, and updating the GUI in response to the appropriate command,

characterized in that

the communication interface is further configured for:

sending information via a bidirectional communication protocol informing the remote controller to display a keyboard on the touch screen, at least partially in response to the information received via the bidirectional communication protocol.

5. A computer program product comprising instructions which, when the program is executed by the system of claim 2, cause the system to carry out the method of claim 1.

6. A computer program product comprising instructions which, when the program is executed by a computing device comprising a touch screen, a processing unit for receiving electronic signals from the touch screen and a communication interface,

cause the computing device to function as a remote controller (200) for a remotely controlled device,

in that the instructions cause the computing device to:

translate the touch input signal from the touch screen into touch input data,

send the touch input data to a remotely controlled device (300), the data including at least positional, movement and durational input parameters, wherein the touch input data can be interpreted by the remotely controlled device (300) as one of a plurality of potential commands in a first context of a graphical user interface (GUI) of the remotely controlled device (300) and as another of the plurality of potential commands in a second context of the GUI,

wherein the remotely controlled device is configured for interpreting the touch input data in combination with a current context of the GUI to determine an appropriate command for the current context, and updating the GUI in response to the appropriate command,

characterized in that the instructions further cause the computing device to:

receive information from the remotely controlled device (300) via a bidirectional communication protocol informing the remote controller to display a keyboard on the touch screen, at least partially in response to the information received via the bidirectional communication protocol.

7. A computer program product comprising instructions which, when the program is executed by a computing device comprising a display, a processing unit and a communication interface,

cause the device to function as a remotely controlled device (300) being controlled by a remote controller (200),

in that the instructions cause the computing device to:

display a graphical user interface (GUI),

receive touch input data from a remote controller (200), the data including at least positional, movement and durational input parameters, wherein the touch input data can be interpreted by the remotely controlled device (300) as one of a plurality of potential commands in a first context of a graphical user interface (GUI) of the remotely controlled device (300) and as another of the plurality of potential commands in a second context of the GUI,

interpret the touch input data in combination with a current context of the GUI to determine an appropriate command for the current context, and update the GUI in response to the appropriate command,

characterized in that the instructions further cause the computing device to:

send information via a bidirectional communication protocol informing the remote controller to display a keyboard on the touch screen, at least partially in response to the information received via the bidirectional communication protocol.

Abstract

Directional touch remote

R. 47(1)

Title of invention

The present system and method is particularly useful for remotely controlling a device having one or more menus via a remote touch interface having at least an unstructured primary input area. A user can provide inputs to a touch interface without needing to view the interface and yet still achieve the desired response from the remotely controlled device. The primary input area of the touch interface may or may not have a background display, such as on a touch screen, but the primary input area of the touch interface should be unstructured and should not have independently selectable items, buttons, icons or anything of the like. Since the touch interface is unstructured, the user does not have to identify any selectable buttons. Instead the user can input a gesture into the interface and watch the remotely controlled device respond. The system does not provide any other visual confirmation.